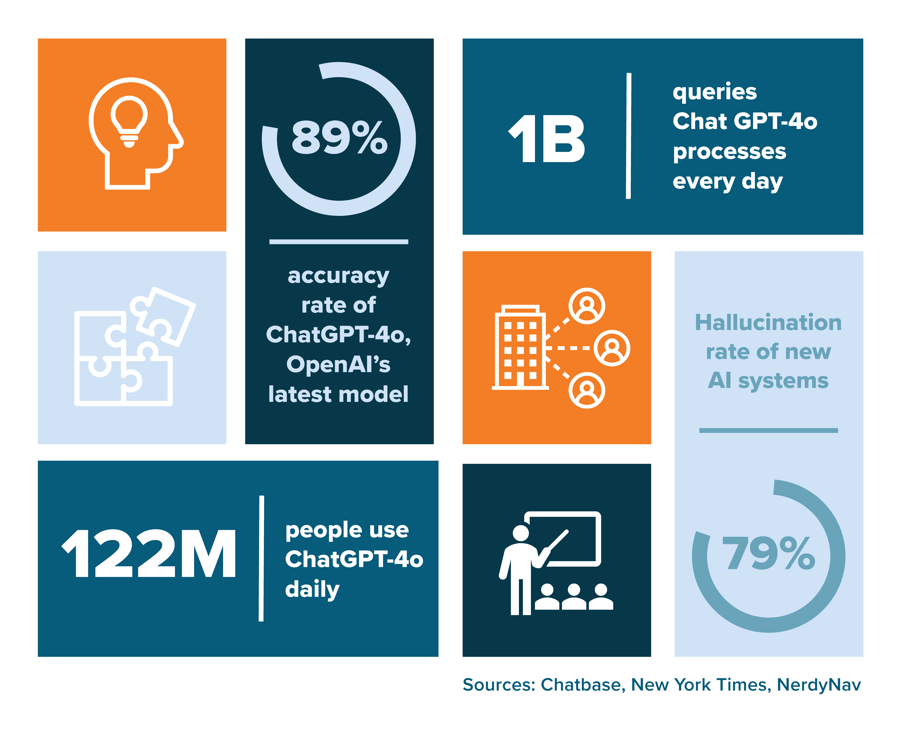

In 2022, OpenAI launched ChatGPT, one of the first generative artificial intelligence (GAI) tools to reach a mass public audience. The large language model (LLM) behind it is trained on massive quantities of text data, allowing it to recognize linguistic patterns and make contextual inferences based on a user’s prompt. Now running on the GPT-4o model, ChatGPT has amassed “122.58 million daily users, processing over 1 billion queries every day” (NerdyNav). As users grow more comfortable with GAI tools such as ChatGPT, organizations face a question: do we let our employees use these tools, and if so, how?

As a security consultant, my focus has largely been on the Governance, Risk, and Compliance (GRC) space, with heavy emphasis on helping customers mature their governance documentation (i.e., policies, procedures, plans). So, of course, my hesitation with artificial intelligence (AI) comes from the question of my own relevance. However, the kinks are far from ironed out, and human intervention is still very much required whenever AI is used in a professional capacity.

While it may seem enticing to employees and businesses alike to outsource day-to-day tasks to ChatGPT or other LLMs, users should proceed with caution. Although these tools can quickly parse web pages, public data sets, and other repositories, they still struggle with accuracy and pose a data risk to companies that allow their use. Chatbase noted that “ChatGPT-4o (OpenAI’s latest model) achieves an accuracy rate of 88.7%...[but] accuracy can fluctuate” (Chatbase). Because the tool delivers even its inconsistent responses with unwavering confidence, it can mislead users. Further, LLMs like ChatGPT tend to “just make stuff up, a phenomenon some A.I. researchers call hallucinations. On one test, the hallucination rates of newer A.I. systems were as high as 79 percent” (NYTimes). According to the CEO of a startup that builds and deploys GAI, “they will always hallucinate…that will never go away” (NYTimes).

So, if these GAI tools are prone to inaccuracies and fabrications, organizations should avoid their use, right? Not exactly. Artificial intelligence is on its way to being ingrained in all realms of the technological sphere, from chatbots to search engines to digital art generation. “The biggest tech companies are putting record amounts of money into capital spending, primarily for the hardware needed to develop and run AI models” (The Wall Street Journal). To keep pace with these advancements, companies may want to consider incorporating GAI tools – with caution!

The use of AI should be governed through policy, with acceptable and unacceptable behaviors documented and circulated to all company employees. These policies should specify the request process for GAI usage, the approved tools, the conditions under which AI use is appropriate, and the types of data that can and cannot be used. Employees should be required to acknowledge AI-related policies during annual security awareness and training activities to ensure they understand usage limitations.
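For teams that want to see how such a policy might translate into an enforceable check, here is a minimal sketch in Python. It is an illustration only, not a prescribed implementation: the `AIUsePolicy` class, its field names, and the example tool and data-type labels are all hypothetical, and a real policy would carry more conditions than these three.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AIUsePolicy:
    """Hypothetical, simplified encoding of an AI acceptable use policy."""

    approved_tools: set[str]       # tools cleared through the request process
    prohibited_data: set[str]      # data types that may never be submitted
    acknowledged_by: set[str] = field(default_factory=set)  # employees who signed off

    def check(self, employee: str, tool: str, data_types: set[str]) -> tuple[bool, str]:
        """Return (allowed, reason) for a proposed use of a GAI tool."""
        if employee not in self.acknowledged_by:
            return False, "employee has not acknowledged the AI policy"
        if tool not in self.approved_tools:
            return False, f"{tool} is not approved; submit a usage request"
        blocked = sorted(data_types & self.prohibited_data)
        if blocked:
            return False, "prohibited data types: " + ", ".join(blocked)
        return True, "permitted"


# Example values below are placeholders, not recommendations.
policy = AIUsePolicy(
    approved_tools={"approved-chat-tool"},
    prohibited_data={"customer_pii", "source_code"},
    acknowledged_by={"alice"},
)

print(policy.check("alice", "approved-chat-tool", {"public_marketing_copy"}))
print(policy.check("alice", "approved-chat-tool", {"customer_pii"}))
print(policy.check("bob", "approved-chat-tool", {"public_marketing_copy"}))
```

The point of the sketch is that each element of the written policy (acknowledgment, approved tools, permitted data) maps to a condition that can be checked before a request is allowed, which is only possible once the policy states those elements explicitly.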

Companies should also look to more recently established guidance to assist with the development of their AI programs, such as the National Institute of Standards and Technology’s Artificial Intelligence Risk Management Framework (NIST AI RMF) and ISO/IEC 42001 from the International Organization for Standardization. These frameworks were developed to “address the unique challenges AI poses…For organizations, it sets out a structured way to manage risks and opportunities associated with AI, balancing innovation with governance” (ISO). Adherence to domestically and internationally recognized frameworks provides a structure that touches on all facets of security, as decided by professionals across the globe. Frameworks also provide a foundation for maturity progression, offering organizations a benchmark against which they can continuously measure their security program’s success.

So, perhaps the answer to our question is not ‘no,’ but ‘yes, and’: we find ways to say ‘yes’ and do it right. In this instance, doing it right means being prepared through documentation, training and awareness, and framework alignment. The approach to artificial intelligence should allow for streamlined processes, effective oversight, and collective engagement across all business units. By taking these steps, organizations can set themselves on a path toward AI integration while reducing the chance that the risks associated with GAI use are realized.

How K logix Can Help

K logix consultants are well versed in artificial intelligence-focused frameworks such as NIST’s AI RMF and ISO/IEC 42001. Consultants can conduct assessments to understand the current maturity level of an organization’s AI program while offering recommendations for enhancement and continued alignment with identified frameworks. Further, the consultants at K logix have assisted several customers with the development and maturation of AI-related documentation, such as an AI Acceptable Use Policy. Interested in learning more about how K logix can help you streamline your AI program? Contact info@klogixsecurity.com.